In some cases, when downloading files from a remote server, or simply requesting a URL or web page with the cURL command, the request fails with an HTTP 403 Forbidden error ("access is forbidden").

The most common reason is that the server checks the client application before allowing it to access its content, or applies web application firewall (WAF) rules that block requests which do not look like they come from a regular web browser.

Either way, we can apply the following adjustments to the cURL command line so that it emulates a web browser as closely as possible, for the specific requests that need it.
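
Before changing anything, it helps to confirm that the server really answers with 403. The sketch below is a minimal example, not part of the original tutorial: the hypothetical `http_status` function prints only the status code using cURL's `--write-out '%{http_code}'` and discards the body with `--output /dev/null`, and the hypothetical `is_forbidden` function checks for 403. Extra cURL options can be passed after the URL, so the same check works for a plain request and for the adjusted one.

```shell
#!/usr/bin/env bash
# http_status (hypothetical helper name): print only the HTTP status code.
# The body is discarded; any extra cURL options are passed after the URL.
# cURL prints 000 when no HTTP response was received at all.
http_status() {
  local url="$1"
  shift
  curl --silent --output /dev/null --write-out '%{http_code}' "$@" "$url"
}

# is_forbidden (hypothetical helper name): succeed when the code is 403.
is_forbidden() {
  [ "$1" = "403" ]
}

# Example (performs a real request):
#   code=$(http_status 'https://www.chromium.org/chromium-projects/')
#   is_forbidden "$code" && echo "403: the server rejected this client"
```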

Prepare cURL Header Parameters

You can prepare the cURL command parameters as follows:

Collect Browser Data for cURL

1- Visit your target web page in a Chromium-based desktop web browser, such as Google Chrome.

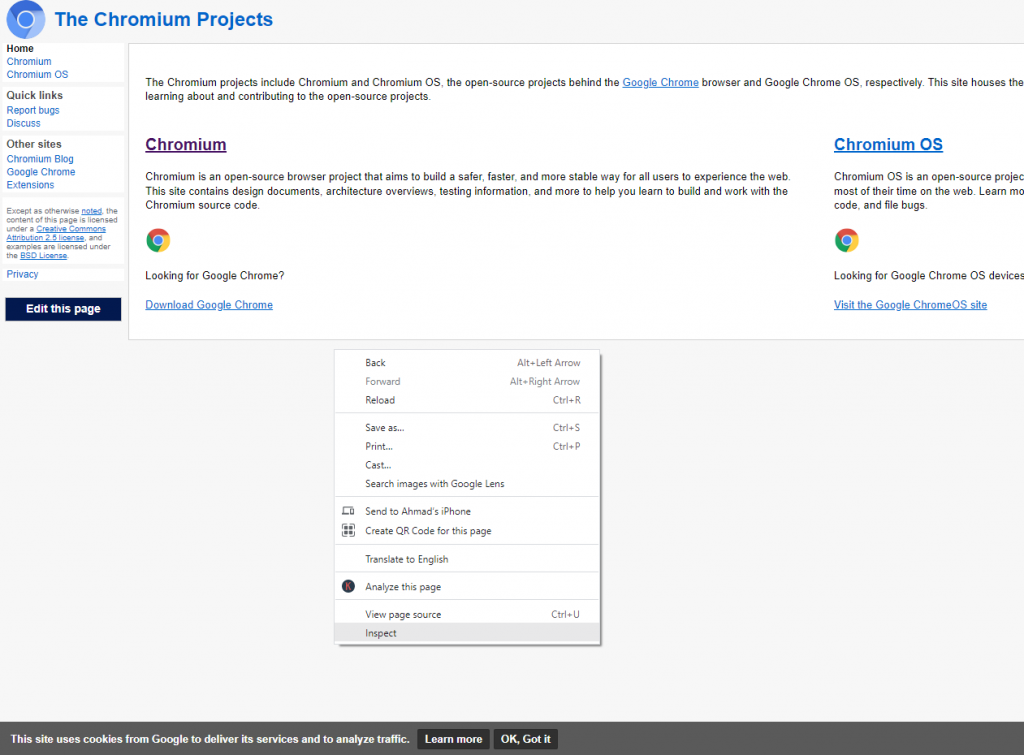

2- Right-click on the web page and select “Inspect” from the context menu, as shown in the screenshot below. This opens the Chrome Developer Tools.

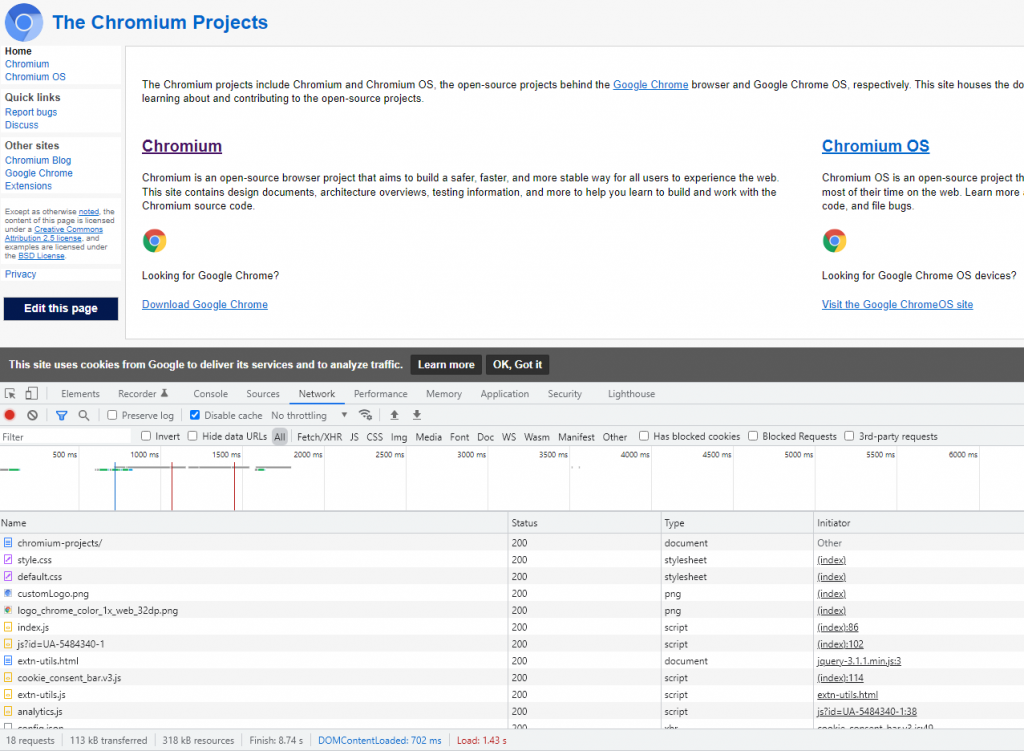

3- In the Developer Tools, select the “Network” tab, then reload the page while the “Network” tab is active.

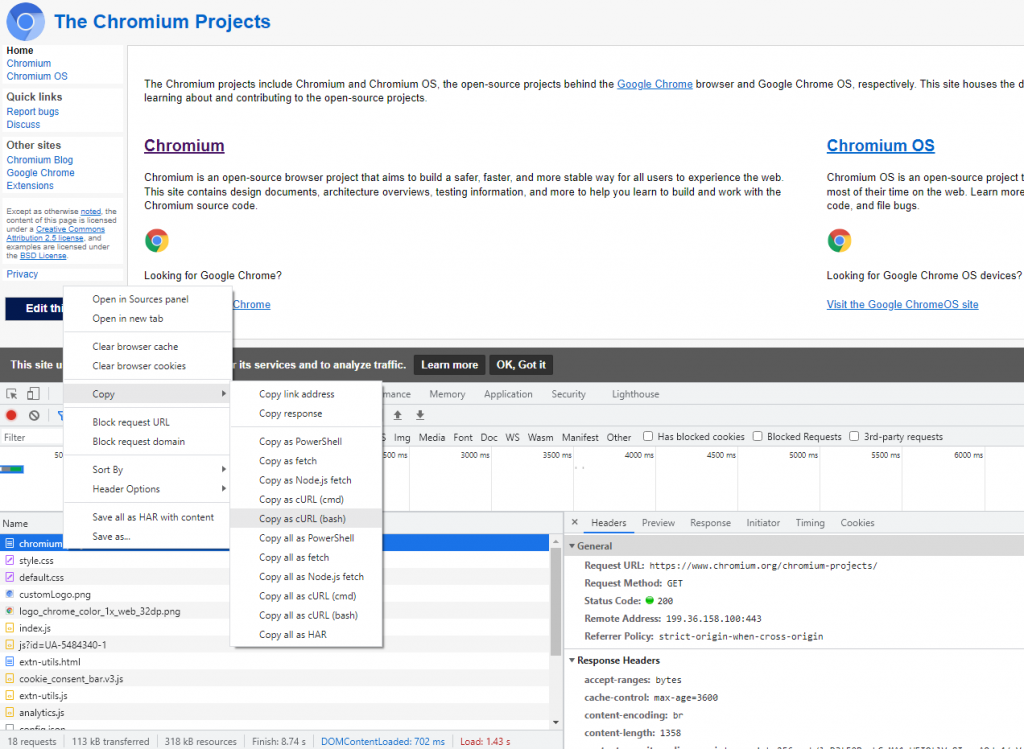

4- In the “Network” tab, right-click the first request under the “Name” column, select “Copy” from the pop-up menu, then “Copy as cURL (bash)”, as shown in the screenshot below.

The copied request will look similar to the following:

curl 'https://www.chromium.org/chromium-projects/' \
  -H 'authority: www.chromium.org' \
  -H 'pragma: no-cache' \
  -H 'cache-control: no-cache' \
  -H 'sec-ch-ua: " Not A;Brand";v="99", "Chromium";v="98", "Google Chrome";v="98"' \
  -H 'sec-ch-ua-mobile: ?0' \
  -H 'sec-ch-ua-platform: "Windows"' \
  -H 'upgrade-insecure-requests: 1' \
  -H 'user-agent: Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/98.0.4758.102 Safari/537.36' \
  -H 'accept: text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9' \
  -H 'sec-fetch-site: same-origin' \
  -H 'sec-fetch-mode: navigate' \
  -H 'sec-fetch-user: ?1' \
  -H 'sec-fetch-dest: document' \
  -H 'referer: https://www.chromium.org/' \
  -H 'accept-language: en-US,en;q=0.9,ar;q=0.8,tr;q=0.7' \
  -H 'cookie: _ga=GA1.2.579855328.1643135453; _gid=GA1.2.1955147752.1645531691' \
  --compressed

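As we can see, this gives us a good set of cURL header parameters to use in our command at the terminal: the browser's User-Agent, its Accept and Accept-Language headers, real cookie values, and the --compressed option, which makes cURL send an Accept-Encoding header and decompress the response.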
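
If you send several requests with the same browser headers, it can be convenient to keep them in one place. The sketch below assumes bash: the hypothetical `BROWSER_HEADERS` array holds a subset of the copied headers (the cookie header is left out because its values are session-specific), and the hypothetical `browser_curl` wrapper passes them to cURL together with --compressed. Quoting the array as `"${BROWSER_HEADERS[@]}"` keeps each header value as a single argument even though it contains spaces.

```shell
#!/usr/bin/env bash
# BROWSER_HEADERS (hypothetical name): a subset of the headers copied
# with "Copy as cURL (bash)". Replace the values with your own copy.
BROWSER_HEADERS=(
  -H 'user-agent: Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/98.0.4758.102 Safari/537.36'
  -H 'accept: text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9'
  -H 'accept-language: en-US,en;q=0.9'
  -H 'upgrade-insecure-requests: 1'
)

# browser_curl (hypothetical wrapper): send the browser headers, ask for
# compressed content, and forward any extra arguments (URL, options) to cURL.
browser_curl() {
  curl "${BROWSER_HEADERS[@]}" --compressed "$@"
}

# Example (performs a real request):
#   browser_curl 'https://www.chromium.org/chromium-projects/'
```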

Add cURL Proxy Parameters

We can append the proxy parameters to the cURL command above as follows:

--proxy http://proxy_server:proxy_port --proxy-user proxyusername:proxypassword

When using a SOCKS proxy:

--socks5 socks_server_ip:socks_port --proxy-user proxyusername:proxypassword
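
cURL's --proxy option also accepts a scheme prefix such as socks5://, so a single code path can cover both the HTTP and the SOCKS case. The sketch below is a minimal example under that assumption: the hypothetical `proxy_args` function fills a `PROXY_ARGS` array from the hypothetical variables `PROXY_URL`, `PROXY_USER` and `PROXY_PASS`, and leaves the array empty when no proxy is configured, so the same curl command works with or without a proxy.

```shell
#!/usr/bin/env bash
# proxy_args (hypothetical helper): build PROXY_ARGS from PROXY_URL,
# PROXY_USER and PROXY_PASS (hypothetical variable names).
# PROXY_URL may be http://host:port or socks5://host:port.
proxy_args() {
  PROXY_ARGS=()
  if [ -n "${PROXY_URL:-}" ]; then
    PROXY_ARGS+=(--proxy "$PROXY_URL")
    # Only add credentials when a user name is set.
    if [ -n "${PROXY_USER:-}" ]; then
      PROXY_ARGS+=(--proxy-user "${PROXY_USER}:${PROXY_PASS:-}")
    fi
  fi
}

# Example (performs a real request):
#   PROXY_URL='socks5://socks_server_ip:socks_port'
#   PROXY_USER='proxyusername' PROXY_PASS='proxypassword'
#   proxy_args
#   curl "${PROXY_ARGS[@]}" 'https://www.chromium.org/chromium-projects/'
```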

cURL Skip SSL Certificate Verification

We can append the -k / --insecure option to skip cURL's SSL certificate verification and allow insecure server connections to HTTPS URLs.

--insecure
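
Because --insecure turns off certificate checks entirely, one option is to add it only when explicitly requested. If the failure is caused by a self-signed or private CA certificate, cURL's --cacert option lets you trust that specific CA file instead. The sketch below assumes bash; the helper `tls_args` and the variables `ALLOW_INSECURE` and `CA_BUNDLE` are hypothetical names chosen for illustration.

```shell
#!/usr/bin/env bash
# tls_args (hypothetical helper): build TLS_ARGS for cURL.
# - ALLOW_INSECURE=1 (hypothetical opt-in variable) adds --insecure.
# - Otherwise, CA_BUNDLE (hypothetical variable) names a CA file for --cacert.
# - With neither set, TLS_ARGS stays empty and cURL verifies normally.
tls_args() {
  TLS_ARGS=()
  if [ "${ALLOW_INSECURE:-0}" = "1" ]; then
    TLS_ARGS+=(--insecure)
  elif [ -n "${CA_BUNDLE:-}" ]; then
    TLS_ARGS+=(--cacert "$CA_BUNDLE")
  fi
}

# Example (performs a real request):
#   CA_BUNDLE=/path/to/ca.pem
#   tls_args
#   curl "${TLS_ARGS[@]}" 'https://www.chromium.org/chromium-projects/'
```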

cURL Allow Cookies

To let the website store cookies on our side, we append the --cookie-jar option with the path of the file where cURL should save the cookies it receives.

--cookie-jar /path/to/cookiefile.txt

We can also send the stored cookies back to the server with our request by pointing the --cookie option at the same file.

--cookie /path/to/cookiefile.txt
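
cURL reads and writes cookie files in the Netscape format: one cookie per line with seven tab-separated fields (domain, include-subdomains flag, path, secure flag, expiry as a Unix timestamp, name, value), where an expiry of 0 marks a session cookie. The sketch below seeds such a file with the _ga and _gid values copied from the browser request above, so --cookie can send them; the `write_cookie_file` function name is hypothetical, and the domain and flag values are assumptions for illustration.

```shell
#!/usr/bin/env bash
# write_cookie_file (hypothetical helper): create a Netscape-format cookie
# file that cURL can read with --cookie and update with --cookie-jar.
# Fields per line (tab-separated):
#   domain  include-subdomains  path  secure  expiry  name  value
write_cookie_file() {
  local file="$1"
  {
    printf '# Netscape HTTP Cookie File\n'
    # Values copied from the browser request; expiry 0 = session cookie.
    printf '%s\t%s\t%s\t%s\t%s\t%s\t%s\n' \
      '.chromium.org' TRUE / FALSE 0 _ga GA1.2.579855328.1643135453
    printf '%s\t%s\t%s\t%s\t%s\t%s\t%s\n' \
      '.chromium.org' TRUE / FALSE 0 _gid GA1.2.1955147752.1645531691
  } > "$file"
}

# Example (performs a real request):
#   write_cookie_file /path/to/cookiefile.txt
#   curl --cookie /path/to/cookiefile.txt --cookie-jar /path/to/cookiefile.txt \
#     'https://www.chromium.org/chromium-projects/'
```

Using the same file for --cookie and --cookie-jar means the cookies are read before the request and the updated set is written back afterwards.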

cURL User-Agent

We can also send the User-Agent string as a separate option by appending the following to our command (-A is the short form of --user-agent):

--user-agent "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/98.0.4758.102 Safari/537.36"
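
By default cURL identifies itself as curl/<version>, which some firewalls reject outright. To check whether the User-Agent alone triggers the 403, one approach is to request the same URL twice and compare the status codes. The sketch below is a minimal example under that assumption; `compare_user_agents` and `BROWSER_UA` are hypothetical names.

```shell
#!/usr/bin/env bash
# BROWSER_UA (hypothetical name): the Chrome 98 string copied above.
BROWSER_UA='Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/98.0.4758.102 Safari/537.36'

# compare_user_agents (hypothetical helper): request the URL once with
# cURL's default User-Agent and once with BROWSER_UA, then print both
# status codes (bodies are discarded).
compare_user_agents() {
  local url="$1" default_code browser_code
  default_code=$(curl --silent --output /dev/null --write-out '%{http_code}' "$url")
  browser_code=$(curl --silent --output /dev/null --write-out '%{http_code}' \
    --user-agent "$BROWSER_UA" "$url")
  printf 'default UA: %s\nbrowser UA: %s\n' "$default_code" "$browser_code"
}

# Example (performs two real requests):
#   compare_user_agents 'https://www.chromium.org/chromium-projects/'
```

If both requests still return 403, the server is probably checking more than the User-Agent, and the other headers or cookies above are worth adding.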

The Complete cURL Command

curl 'https://www.chromium.org/chromium-projects/' \
  -H 'authority: www.chromium.org' \
  -H 'pragma: no-cache' \
  -H 'cache-control: no-cache' \
  -H 'sec-ch-ua: " Not A;Brand";v="99", "Chromium";v="98", "Google Chrome";v="98"' \
  -H 'sec-ch-ua-mobile: ?0' \
  -H 'sec-ch-ua-platform: "Windows"' \
  -H 'upgrade-insecure-requests: 1' \
  -H 'user-agent: Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/98.0.4758.102 Safari/537.36' \
  -H 'accept: text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9' \
  -H 'sec-fetch-site: same-origin' \
  -H 'sec-fetch-mode: navigate' \
  -H 'sec-fetch-user: ?1' \
  -H 'sec-fetch-dest: document' \
  -H 'referer: https://www.chromium.org/' \
  -H 'accept-language: en-US,en;q=0.9,ar;q=0.8,tr;q=0.7' \
  -H 'cookie: _ga=GA1.2.579855328.1643135453; _gid=GA1.2.1955147752.1645531691' \
  --compressed \
  --proxy http://proxy_server:proxy_port --proxy-user proxyusername:proxypassword \
  --insecure \
  --cookie /path/to/cookiefile.txt \
  --cookie-jar /path/to/cookiefile.txt \
  --user-agent "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/98.0.4758.102 Safari/537.36"
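
The complete command is long, and cURL can read the same options from a config file passed with --config (-K). In that file each line holds a long option name without the leading "--", followed by its value (in double quotes when it contains spaces). The sketch below writes a subset of the options above into such a file and then runs cURL with it; `write_curl_config` and `fetch_like_browser` are hypothetical names, and the proxy and --insecure lines are left commented out as an assumption you can enable.

```shell
#!/usr/bin/env bash
# write_curl_config (hypothetical helper): save static request options
# in a cURL config file, to be read later with --config.
write_curl_config() {
  local file="$1"
  cat > "$file" <<'EOF'
# Browser-like request options (values copied from Chrome 98)
user-agent = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/98.0.4758.102 Safari/537.36"
header = "accept: text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9"
header = "accept-language: en-US,en;q=0.9"
header = "referer: https://www.chromium.org/"
compressed
cookie = "/path/to/cookiefile.txt"
cookie-jar = "/path/to/cookiefile.txt"
# proxy = "http://proxy_server:proxy_port"
# insecure
EOF
}

# fetch_like_browser (hypothetical wrapper): apply the config, then the URL.
fetch_like_browser() {
  local config="$1" url="$2"
  curl --config "$config" "$url"
}

# Example (performs a real request):
#   write_curl_config browser.curlrc
#   fetch_like_browser browser.curlrc 'https://www.chromium.org/chromium-projects/'
```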

To use cURL from PHP, take a look at the PHP cURL library in the tutorial: PHP Lib cURL workshop and action tips